Reading Assistance for DHH Technology Workers

User-interface and text-simplification research to support DHH readers...

User-interface and computer-vision research for searching ASL dictionaries...

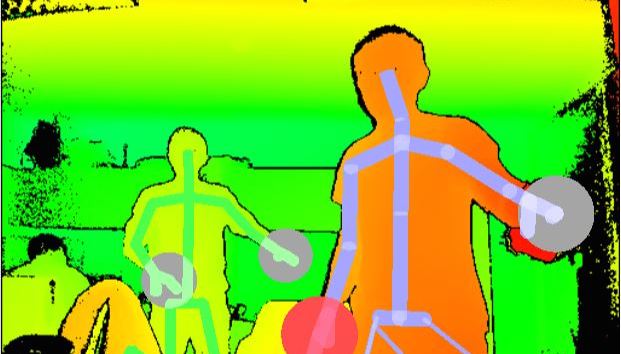

Video and motion-capture recordings collected from signers for linguistic research...

Can design practice incorporate reflective tools to raise awareness of social aspects of accessibility...

How can new technologies support captioning for online video or live meetings...

Comparing the effectiveness of methods for teaching computing students about accessibility...

Designing new pedagogical techniques for including accessibility in higher education curricula...

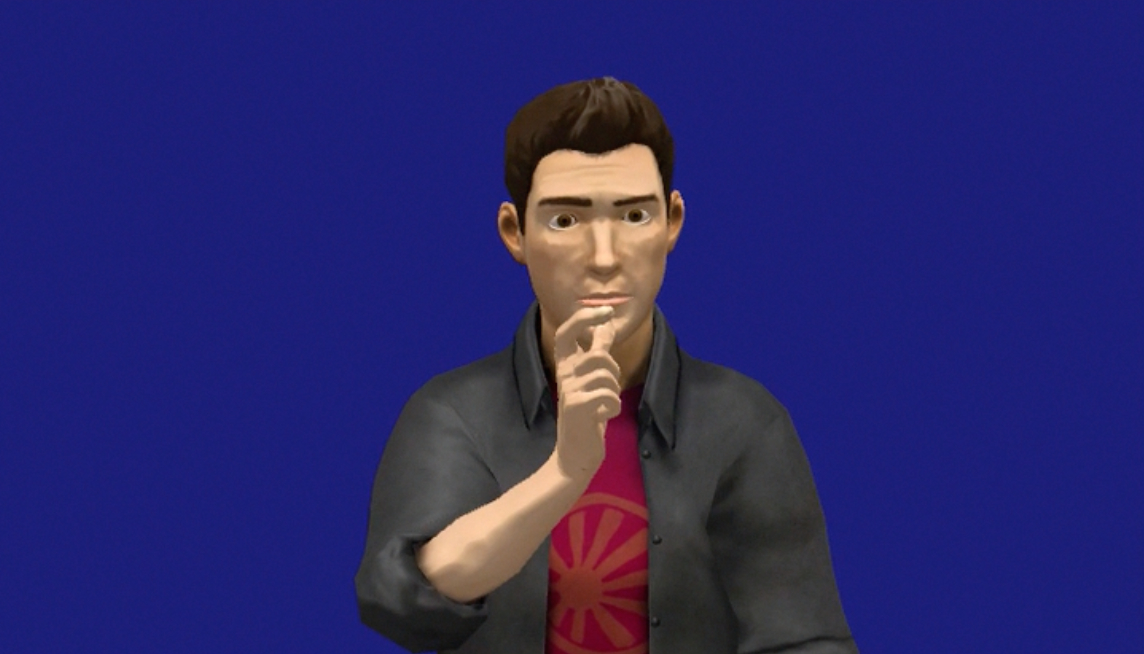

Enabling students learning ASL to practice independently through a tool that provides feedback...

Collecting a motion-capture corpus of ASL and modeling data to produce accurate animations...

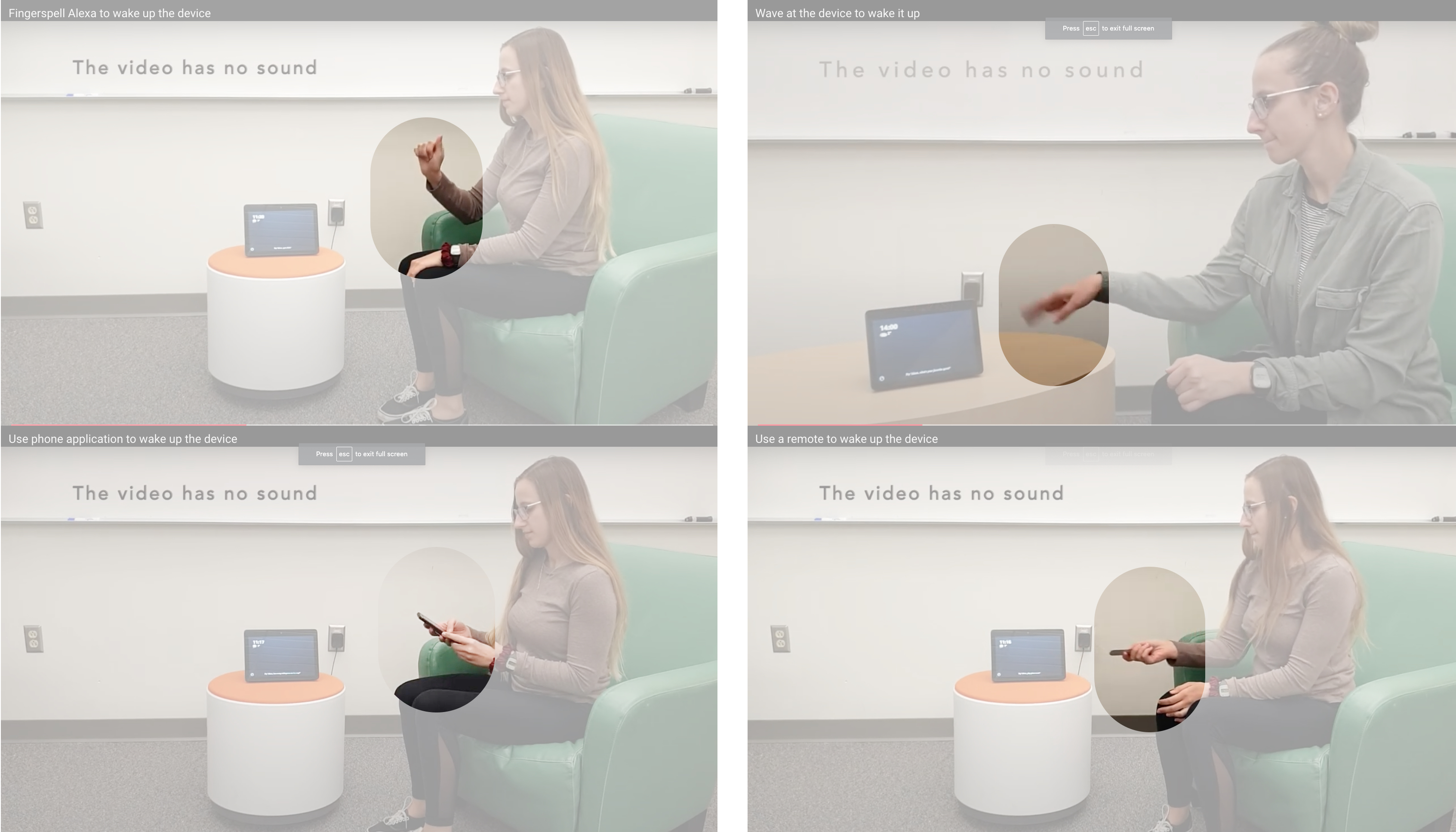

Understanding how DHH users would interact in ASL with personal assistants...

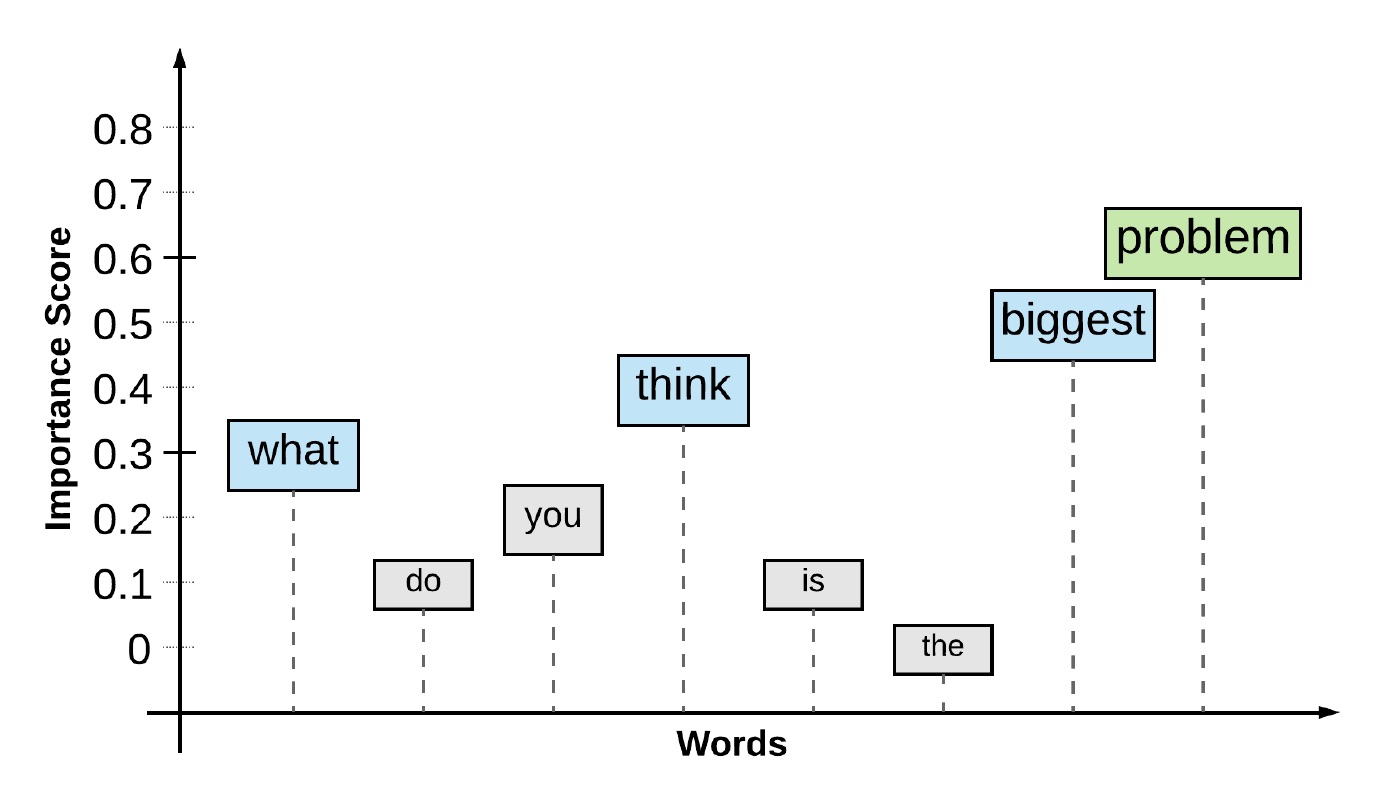

Can we determine automatically which words are most important to the meaning of text captions...

Animated ASL can produce useful perceptual stimuli for linguistic research experiments...

How can we best conduct empirical research on assistive technologies with DHH users...

Analyzing eye-movements to automatically predict when a user does not understand content...

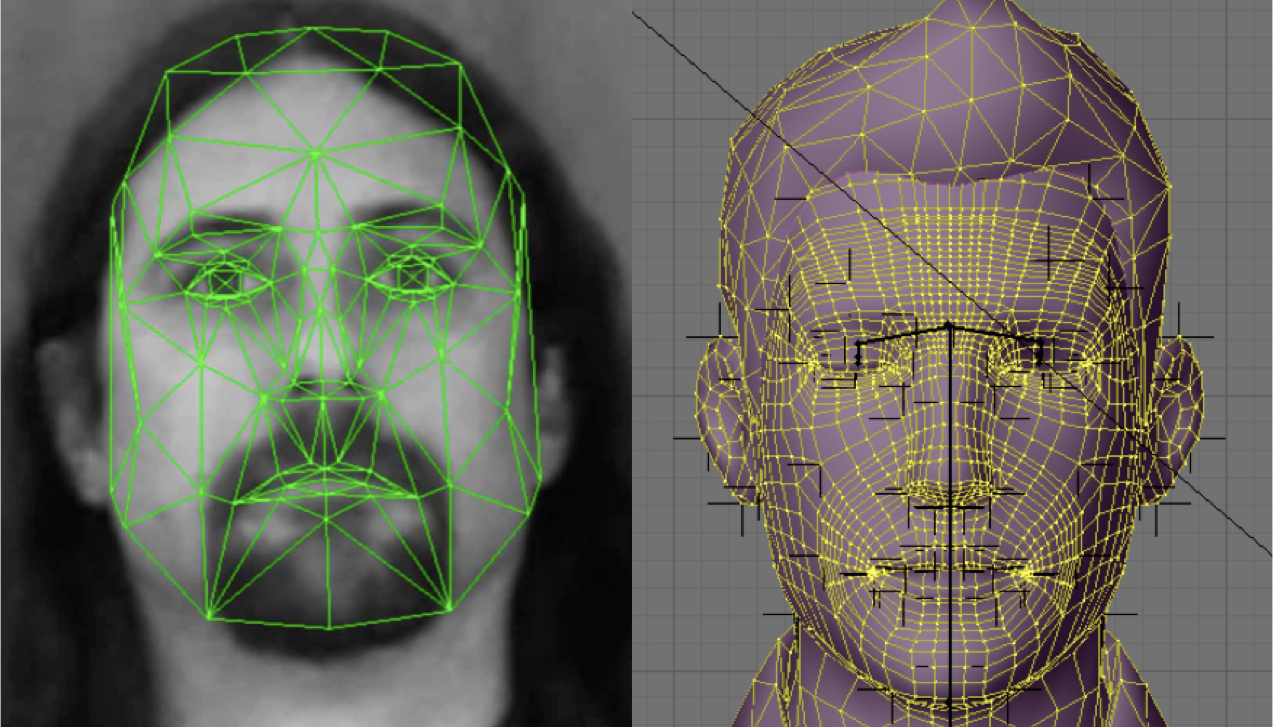

Producing linguistically accurate facial expressions for natural and understandable ASL animations...

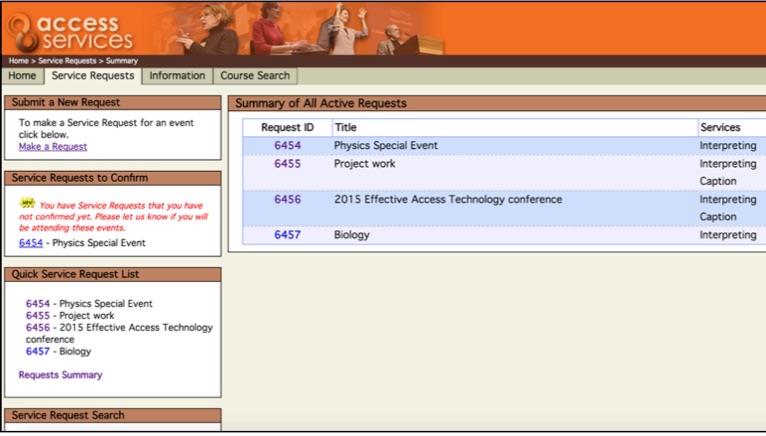

How can we enhance the usability of university websites for requesting access services...

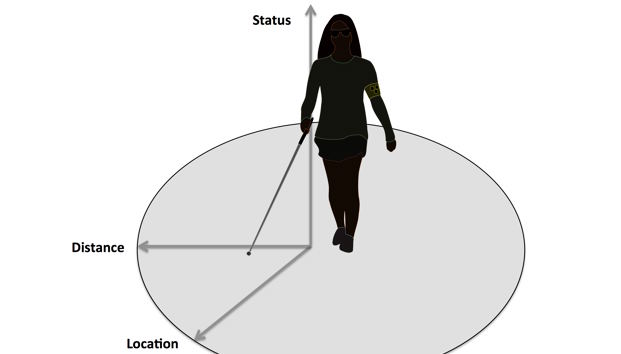

Using situational awareness techniques to evaluate navigation technologies for blind travelers...

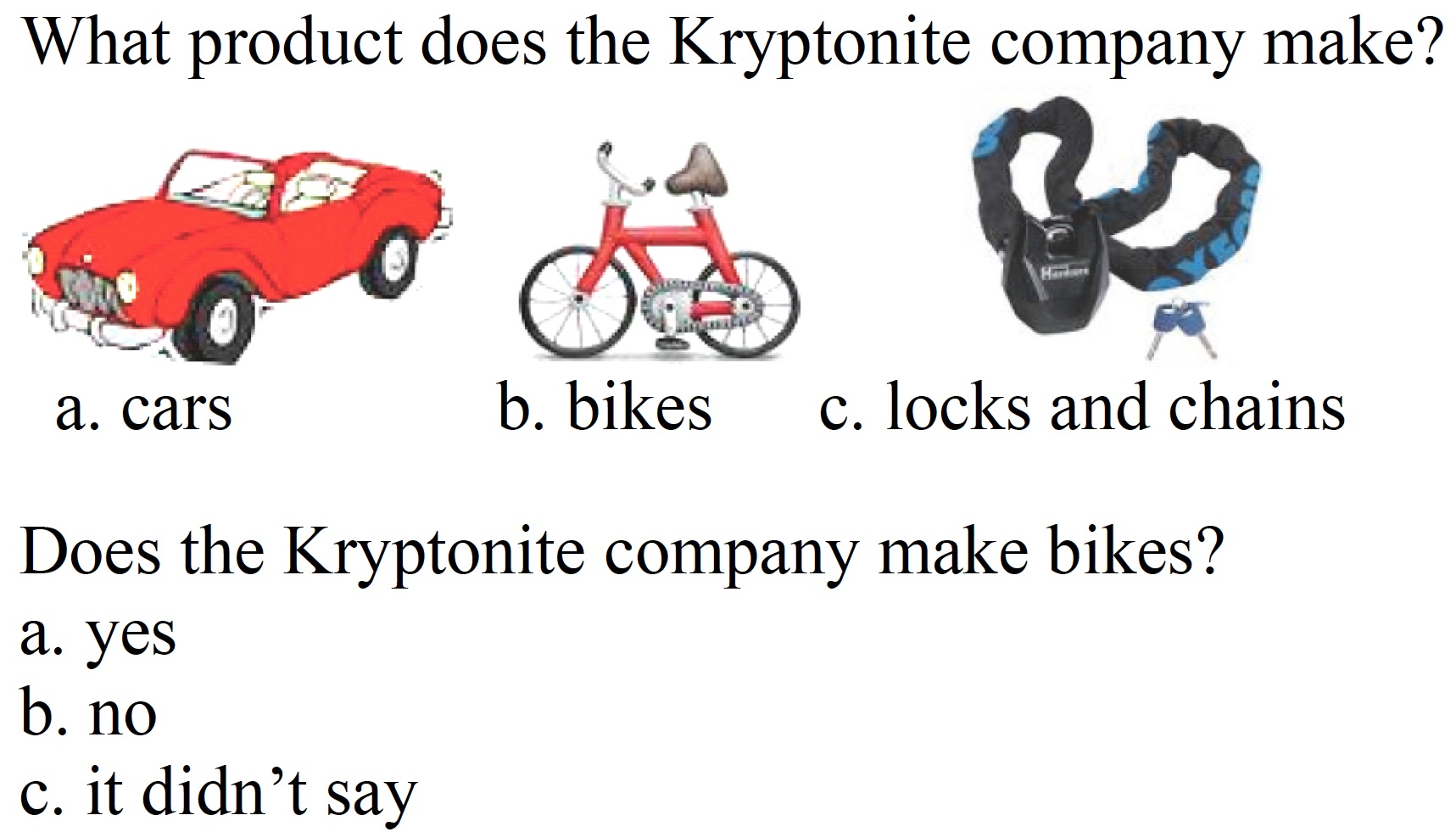

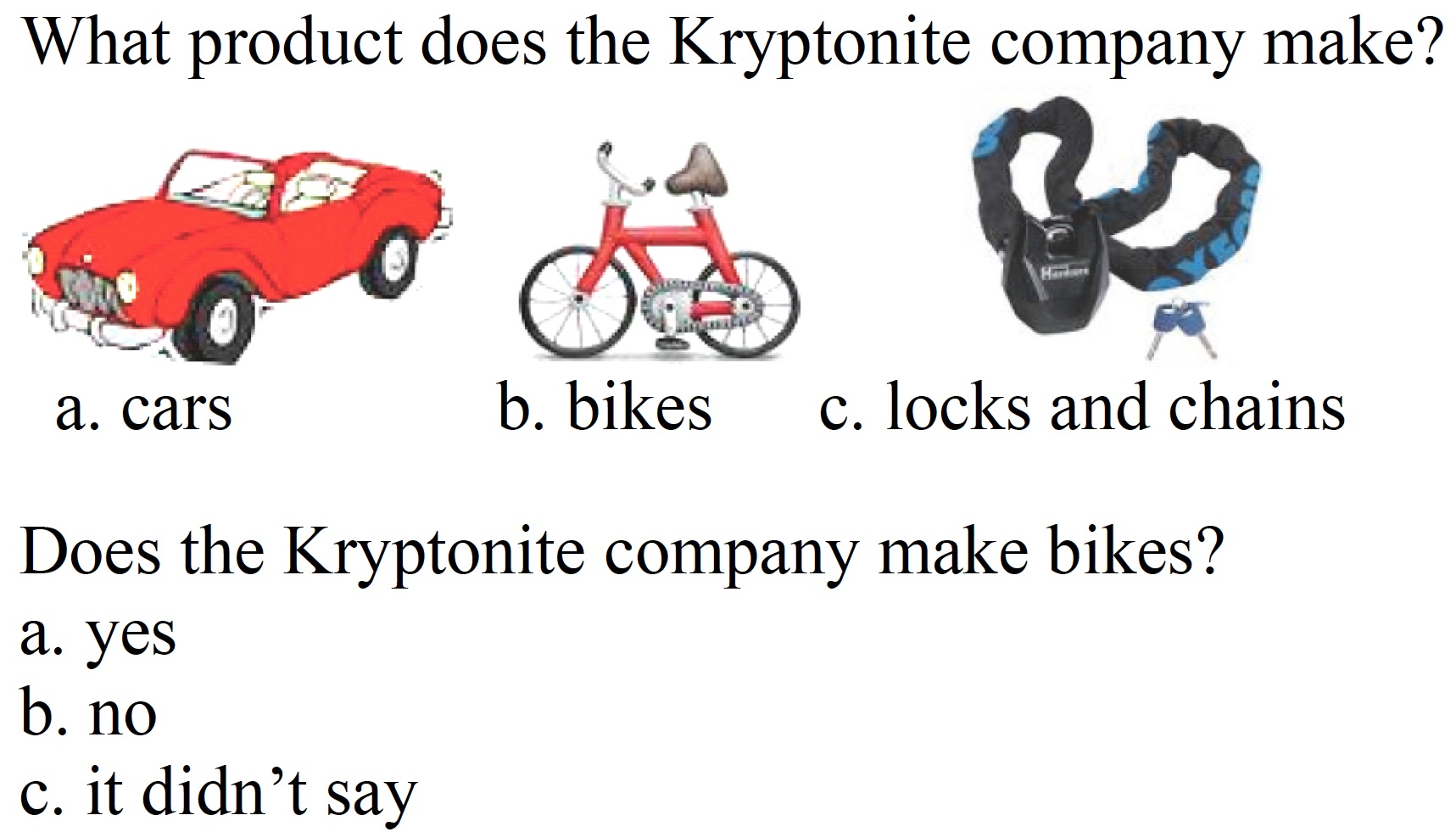

Analyzing English text automatically to identify the difficulty level of the content for users...

We investigate the usability and utility of resources available to speech language therapists...

Enhancing the classroom experience for students who are deaf and hard-of-hearing...

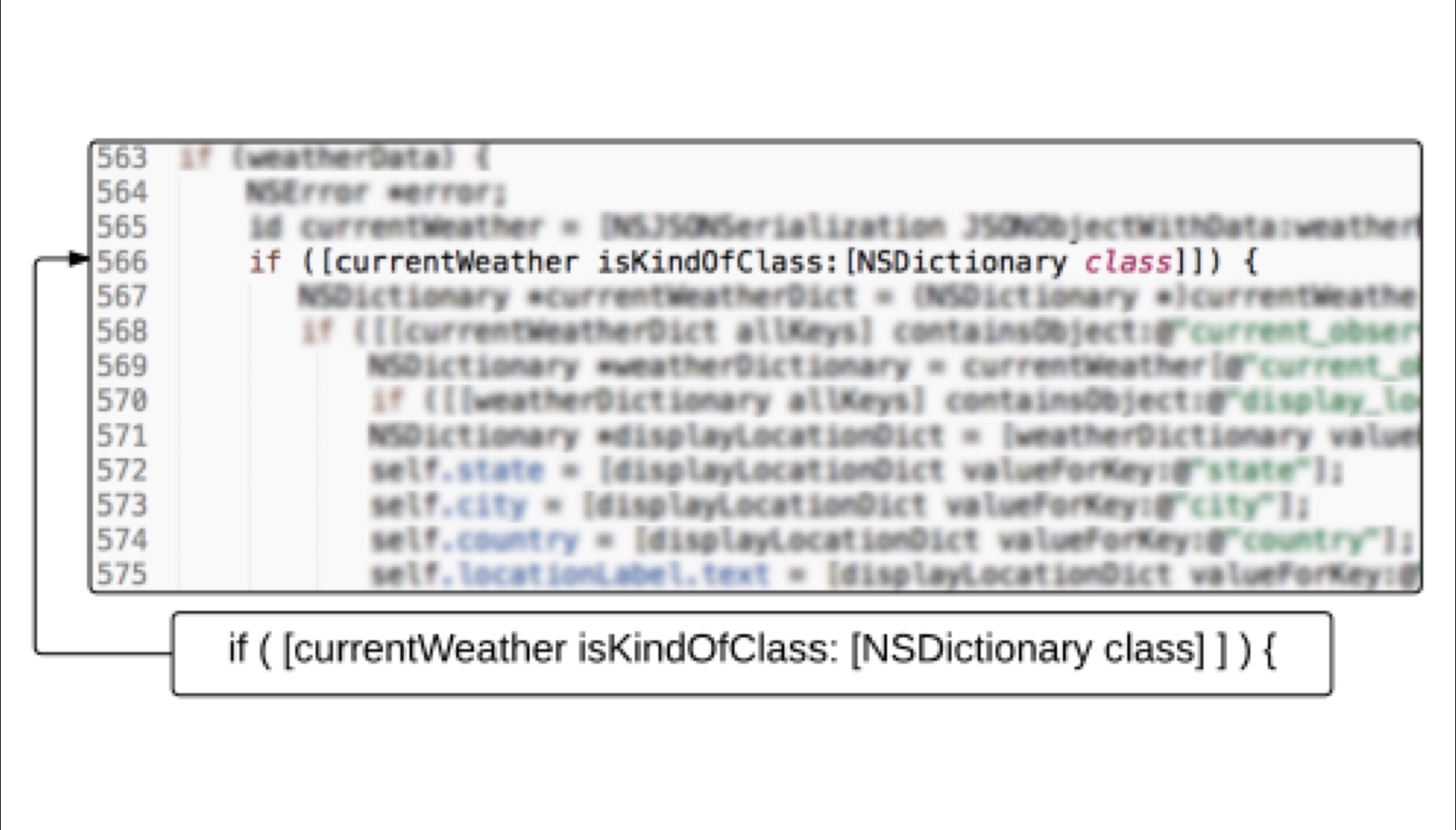

Understanding the requirements of blind programmers and creating useful tools for them...

While there is a shortage of computing and information technology professionals in the U.S., there is underrepresentation of people who are Deaf and Hard of Hearing (DHH) in such careers. Low English reading literacy among some DHH adults can be a particular barrier to computing professions, where workers must regularly "upskill" to learn about rapidly changing technologies throughout their career.

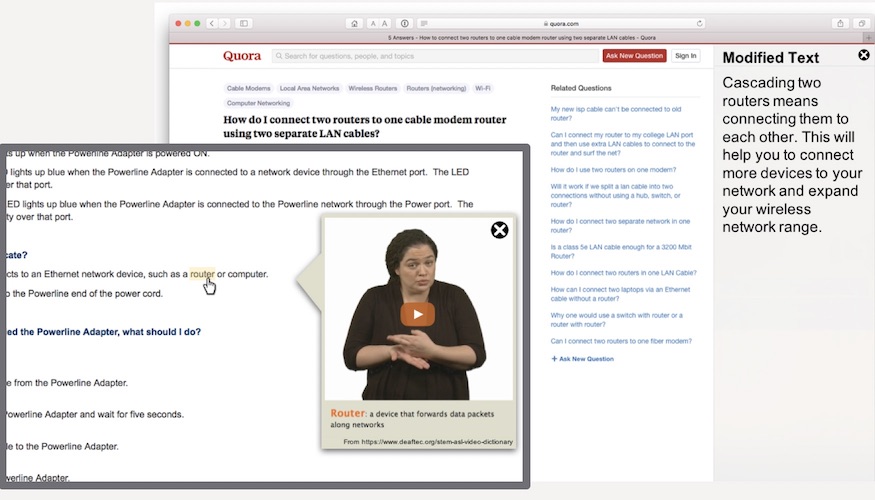

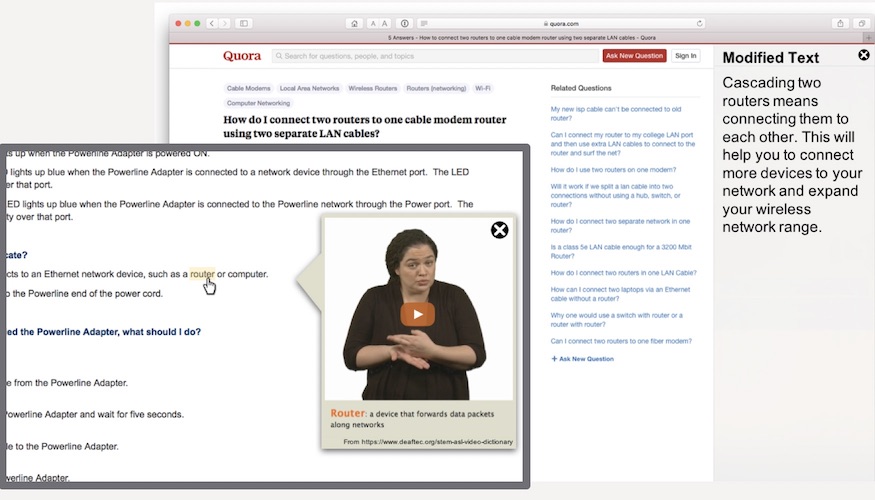

We investigate the design of a web-browser plug-in to provide automatic English text simplification (on-demand) for DHH individuals, including providing simpler synonyms or sign-language videos of complex English words or simpler English paraphrases of sentences or entire documents. By embedding this prototype for use by DHH students as they learn about new computing technologies for workplace projects, we will evaluate the efficacy of our new technologies.

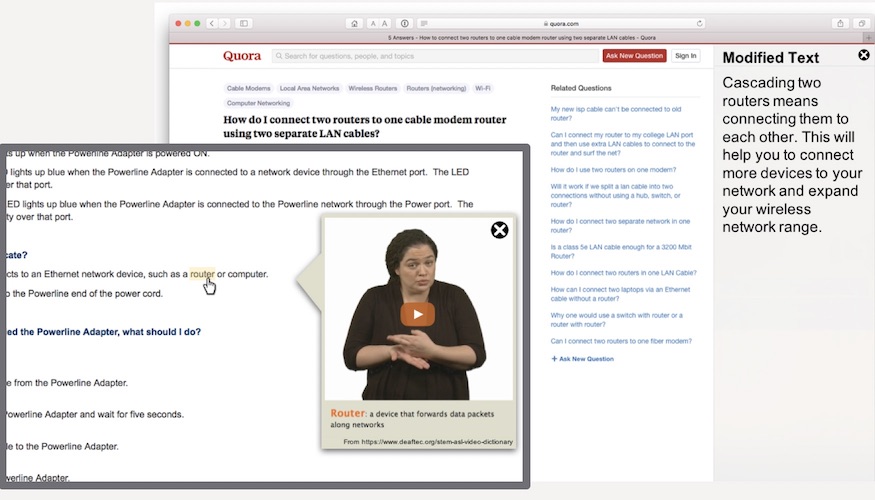

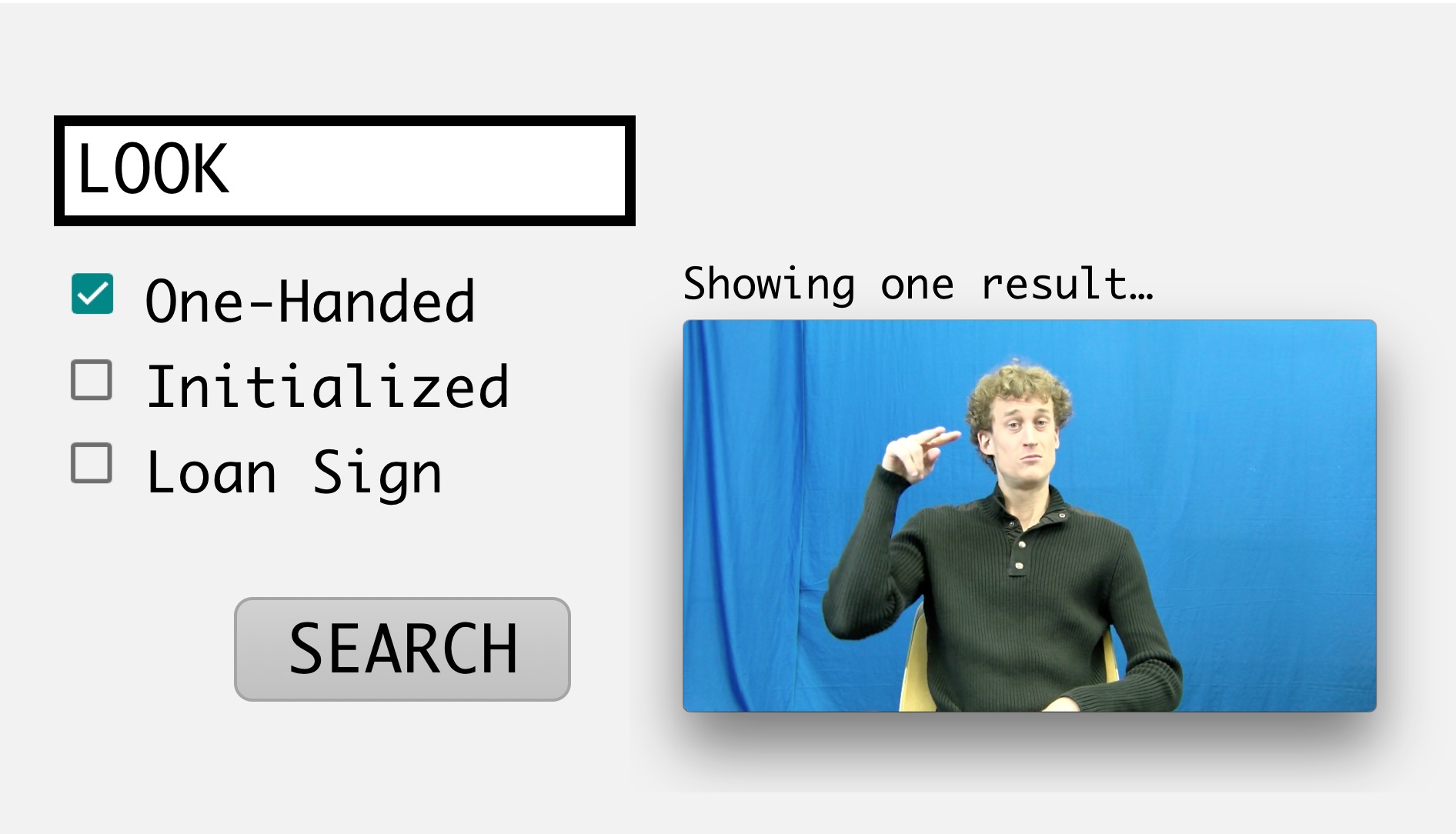

Looking up an unfamiliar word in a dictionary is a common activity in childhood or foreign-language education, yet there is no easy method for doing this in ASL. In this collaborative project, with computer-vision, linguistics, and human-computer interaction researchers, we will develop a user-friendly, video-based sign-lookup interface, for use with online ASL video dictionaries and resources, and for facilitation of ASL annotation.

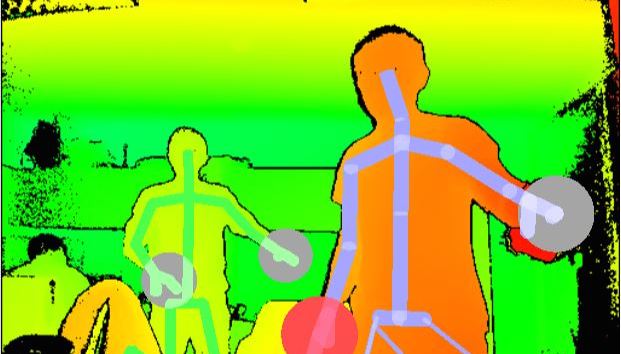

Huenerfauth and his students are collecting video and motion-capture recordings of native sign-language users, in support of linguistic research to investigate the extent to which signed languages evolved over generations to conform to the human visual and articulatory systems, and the extent to which the human visual attention system has been shaped by the use of a signed language within the lifetime of an individual signer.

This project investigates how design practice can best incorporate reflective tools and techniques designed to raise awareness of social aspects of accessibility.

Findings from this research informed the Design for Social Accessibility (DSA) perspective, including the creation of the DSA Method Cards for use in the design process. These method cards should be used with people with and without disabilities in the design process, to elicit effective ideas and feedback in creating accessible solutions.

We are pleased to make available PDFs of our DSA Method Cards free to share, use, and redistribute with attribution, no derivatives (CC BY-ND 2019 University of Washington and Rochester Institute of Technology). Below, we have links to two different sizes, one for Tabloid size (for printing on 11"x17" cardstock or paper) and one for Letter size (for printing on 8.5"x11" cardstock or paper). Print out the PDFs and cut the cards for use, or use the PDFs as they are.

Please send comments, questions or feedback to: kristen (dot) shinohara (at) rit (dot) edu.

What are the requirements and preferences of Deaf and Hard of Hearing users for captioning technology for video programming or for real-time captioning in live meetings? We are investigating the preferences of users for new video captioning services and the design of a tool to caption live one-on-one meetings using imperfect automatic speech recognition (ASR) technology, including how to best convey when the ASR system is not confident it has recognized the words - so that users know when they can trust the captions.
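One way to picture the idea of conveying ASR confidence is sketched below. This is a minimal illustration only, not the tool or display style studied in this project: the function name `mark_low_confidence`, the 0.6 threshold, and the underscore markup are all assumptions chosen for the example, and the input is assumed to be a list of (word, confidence) pairs of the kind an ASR system might report.

```python
def mark_low_confidence(words, threshold=0.6):
    """Render a caption, flagging words whose confidence is below threshold.

    `words` is a list of (word, confidence) pairs with confidence in [0, 1].
    The threshold and the underscore markup are hypothetical choices made
    for this sketch only.
    """
    rendered = []
    for word, confidence in words:
        # Wrap uncertain words in underscores so they stand out to the reader.
        rendered.append(f"_{word}_" if confidence < threshold else word)
    return " ".join(rendered)


caption = [("please", 0.95), ("send", 0.90), ("the", 0.97), ("invoice", 0.41)]
print(mark_low_confidence(caption))
```

In a real captioning interface the marker could instead be a change in font, color, or weight; which presentation users prefer is the kind of question this research examines.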

Matt Huenerfauth (PI). October 2018 to September 2023. Twenty-First Century Captioning Technology, Metrics and Usability. Department of Health and Human Services - Administration for Community Living - National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR) - Disability and Rehabilitation Research Projects (DRRP) program. Sub-award to RIT: $599,881.

Matthew Seita (student fellowship recipient), Matt Huenerfauth (faculty advisor). September 2018 to August 2023. National Science Foundation Graduate Research Fellowship (NSF-GRF) to Matthew Seita. Amount of funding: Tuition and stipend for three years, approximate value: $138,000.

Matt Huenerfauth (PI). February 2017 to February 2018. Identifying the Best Methods for Displaying Word-Confidence in Automatically Generated Captions for Deaf and Hard-of-Hearing Users. Google Faculty Research Awards Program. Amount of funding: $56,902.

Larwan Berke (student fellowship recipient), Matt Huenerfauth (faculty advisor). September 2017 to August 2020. National Science Foundation Graduate Research Fellowship (NSF-GRF) to Larwan Berke. Amount of funding: Tuition and stipend for three years, approximate value: $138,000.

Matt Huenerfauth and Michael Stinson, PIs. September 2015 to August 2017. “Creating the Next Generation of Live-Captioning Technologies.” Internal Seed Research Funding, Office of the President, National Technical Institute for the Deaf, Rochester Institute of Technology.

Matt Huenerfauth, PI. Start-Up Research Funding, Golisano College of Computing and Information Sciences, Rochester Institute of Technology.

This project examines the effectiveness of a variety of methods for teaching computing students about concepts related to computer accessibility for people with disabilities. This multi-year project will include longitudinal testing of students two years after the instruction to search for lasting impacts.

This national initiative among technology companies and universities is promoting accessibility education in university computing degrees.

This project is joint work among Stephanie Ludi, Vicki Hanson, Kristen Shinohara, and Matt Huenerfauth.

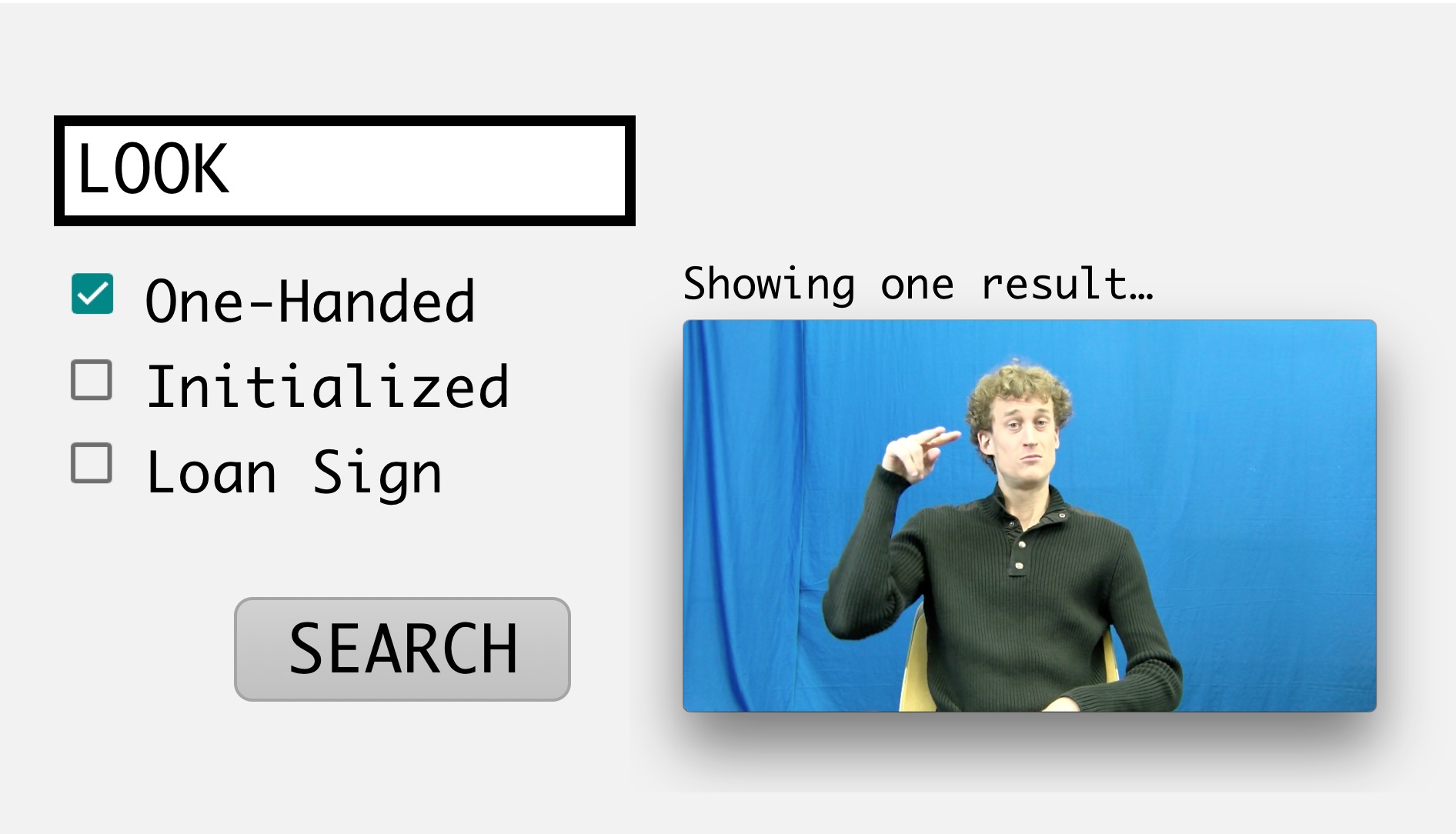

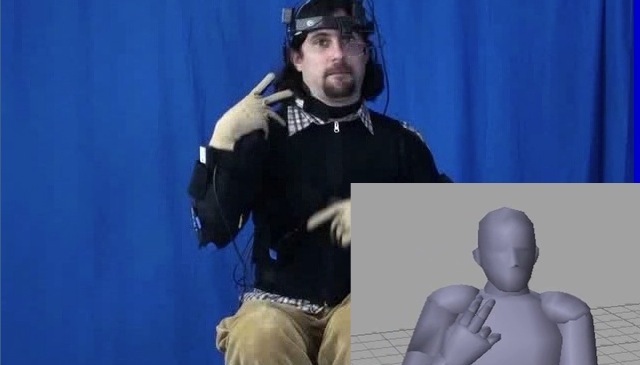

We are investigating new video and motion-capture technologies to enable students learning American Sign Language (ASL) to practice their signing independently through a tool that provides feedback automatically.

This project is joint work with City University of New York, City College and Hunter College.

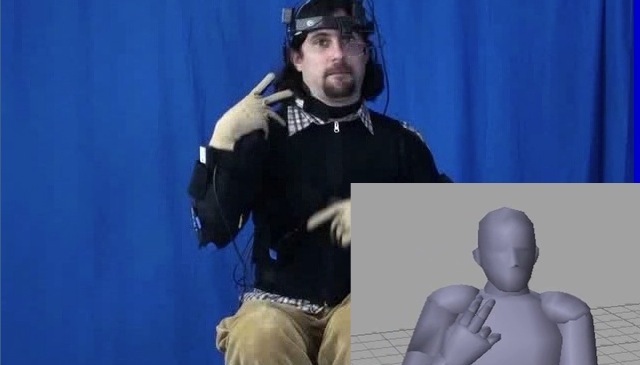

This project is investigating techniques for making use of motion-capture data collected from native ASL signers to produce linguistically accurate animations of American Sign Language. In particular, this project is focused on the use of space for pronominal reference and verb inflection/agreement.

This project also supported a summer research internship program for ASL-signing high school students, and REU supplements from the NSF have supported research experiences for visiting undergraduate students.

The motion-capture corpus of American Sign Language collected during this project is available for non-commercial use by the research community.

This project is conducted by Matt Huenerfauth and his students.

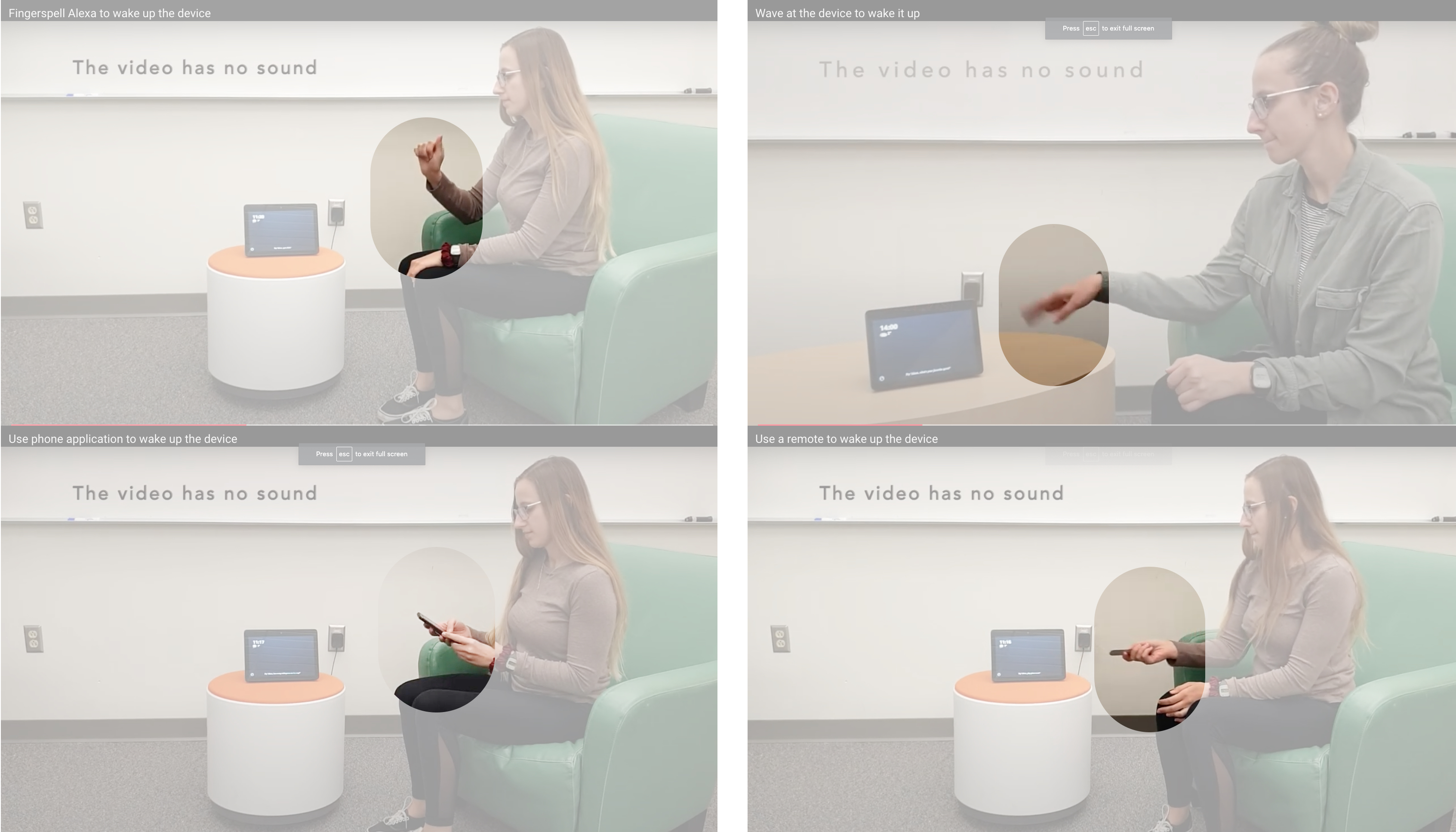

This project focuses on understanding how Deaf and Hard of Hearing (DHH) users may use personal-assistant devices, if they could use sign language to interact with them.

Specifically, we are collecting sign-language video and motion-capture of native American Sign Language (ASL) signers giving commands to (traditionally voice-based) personal assistants or smart speakers. There is a lot of concern in the DHH community about the proliferation of these voice-based technologies in recent years, which make it harder for many DHH users to access popular consumer technology. Having systems that could recognize ASL (at least for the set of common commands for such systems) would be useful, but the bottleneck in the field is a lack of video and motion data (with linguistic annotation) to train machine-learning models to do this recognition of human signing from a video. An extrinsic goal of this project is to create a high-quality, metadata-annotated, publicly-shareable, curated dataset of ASL video and motion-capture data, with diverse signers, focused on a useful application (interacting with personal assistants), to enable video-based ASL recognition research.

This project is supported by an Artificial Intelligence for Accessibility (AI4A) award from Microsoft.

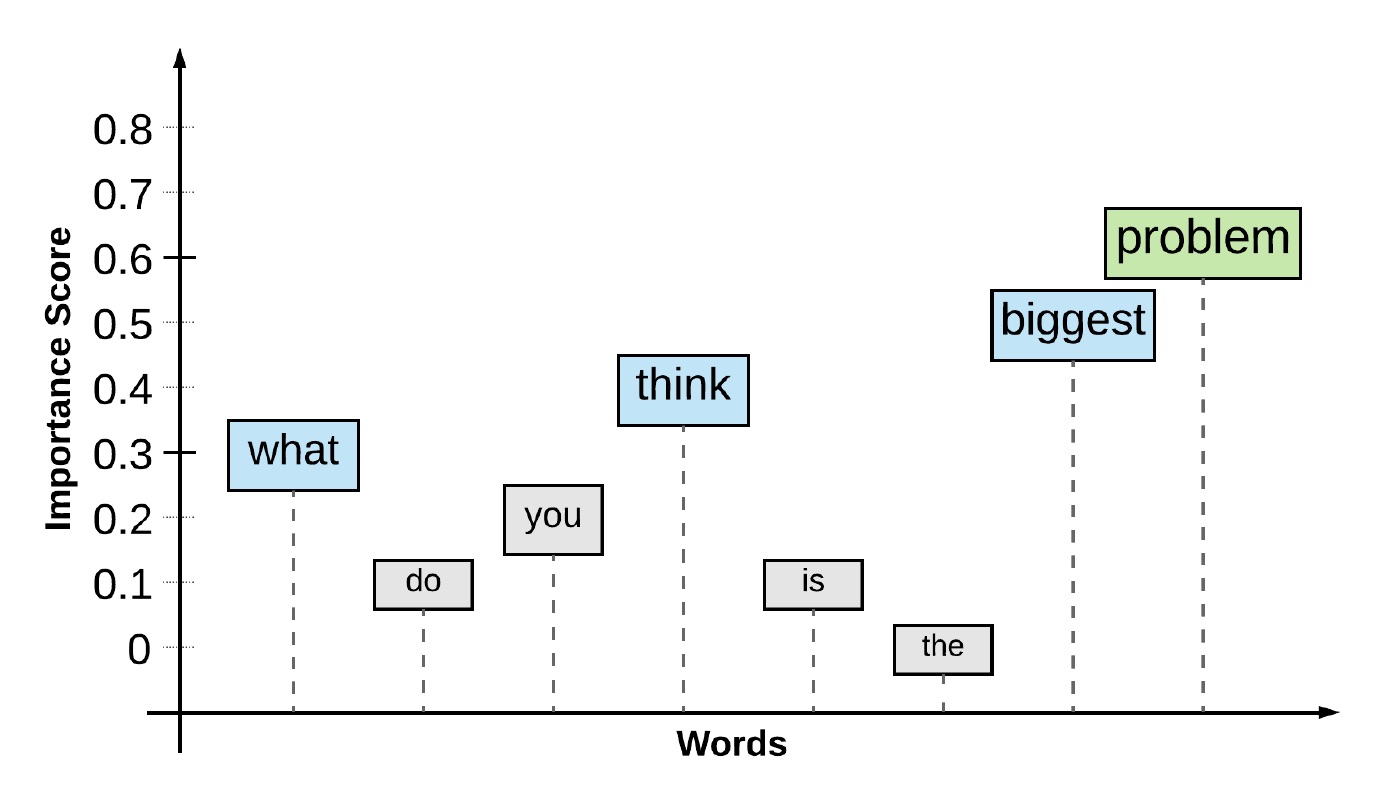

The accuracy of Automated Speech Recognition (ASR) technology has improved, but it is still imperfect in many settings. In order to evaluate the usefulness of captions for Deaf or Hard of Hearing (DHH) users based on ASR, simply counting the number of errors is insufficient, since some words contribute more to the meaning of the text.

We are studying methods for automatically predicting the importance of individual words in a text, for DHH users in a captioning context, and we are using these models to develop alternative evaluation metrics for analyzing ASR accuracy, to predict how useful ASR-based captions would be for users.
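The difference between counting errors and weighting them by word importance can be sketched as follows. This is an illustrative assumption, not the metric developed in this project: the function `weighted_error`, the hand-written importance scores, the default score, and the flat insertion cost are all hypothetical, and the alignment uses Python's `difflib.SequenceMatcher`, which is a convenient approximation rather than a minimum-edit-distance alignment.

```python
from difflib import SequenceMatcher


def weighted_error(reference, hypothesis, importance,
                   default=0.5, insertion_cost=0.5):
    """Score a caption by the share of reference-word importance it loses.

    `importance` maps words to hypothetical importance scores; words not in
    the map receive `default`. Inserted words add a flat `insertion_cost`.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    matcher = SequenceMatcher(a=ref, b=hyp, autojunk=False)

    lost = 0.0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            # Reference words that were substituted or dropped.
            lost += sum(importance.get(w, default) for w in ref[i1:i2])
        elif tag == "insert":
            # Extra words that appear only in the ASR output.
            lost += insertion_cost * (j2 - j1)

    total = sum(importance.get(w, default) for w in ref)
    return lost / total if total else 0.0


scores = {"meeting": 1.0, "moved": 1.0, "friday": 1.0,
          "the": 0.1, "is": 0.1, "to": 0.1}
reference = "the meeting is moved to friday"
minor = weighted_error(reference, "a meeting is moved to friday", scores)
major = weighted_error(reference, "the meeting is moved to monday", scores)
print(minor < major)
```

Both hypotheses contain exactly one wrong word, so a plain error count treats them equally, while the weighted score penalizes the loss of "friday" far more than the loss of "the".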

Matt Huenerfauth and Michael Stinson, PIs. September 2015 to August 2017. “Creating the Next Generation of Live-Captioning Technologies.” Internal Seed Research Funding, Office of the President, National Technical Institute for the Deaf, Rochester Institute of Technology.

Matt Huenerfauth, PI. Start-Up Research Funding, Golisano College of Computing and Information Sciences, Rochester Institute of Technology.

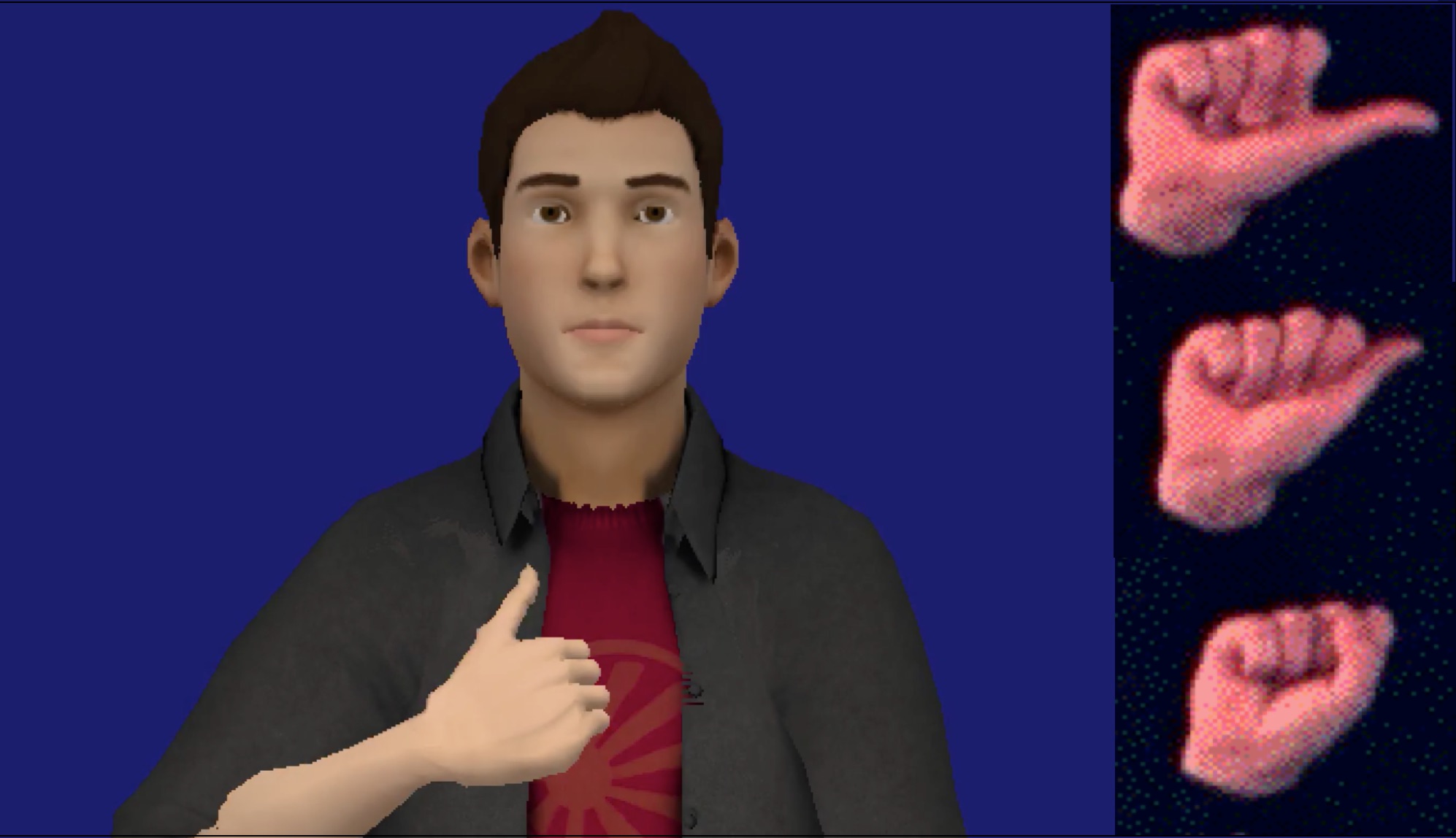

Animated virtual humans can produce a wide variety of subtle performances of American Sign Language, including minor variations in handshape, location, orientation, or movement. This technology can produce stimuli for display in experimental studies with ASL signers, to study ASL linguistics.

This project is joint work among Matt Huenerfauth and colleagues at NTID.

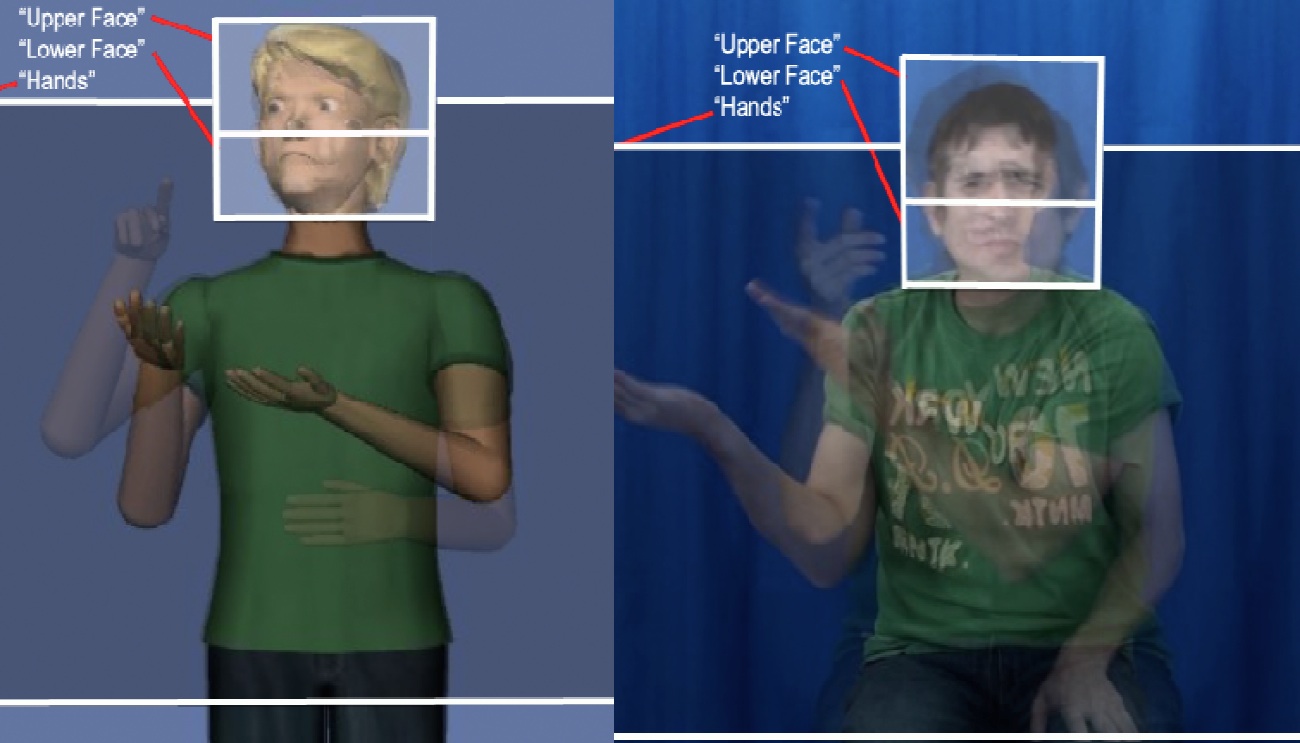

We have conducted a variety of methodological research on the most effective ways to structure empirical evaluation studies of technology with Deaf and Hard of Hearing (DHH) users.

This research has included the creation of standard stimuli and question items for studies with ASL animation technology, analysis of the relationship between user demographics and responses to question items, the use of eye-tracking in studies with DHH users, and the creation of American Sign Language versions of standard usability evaluation instruments.

This research is conducted by Matt Huenerfauth and his students.

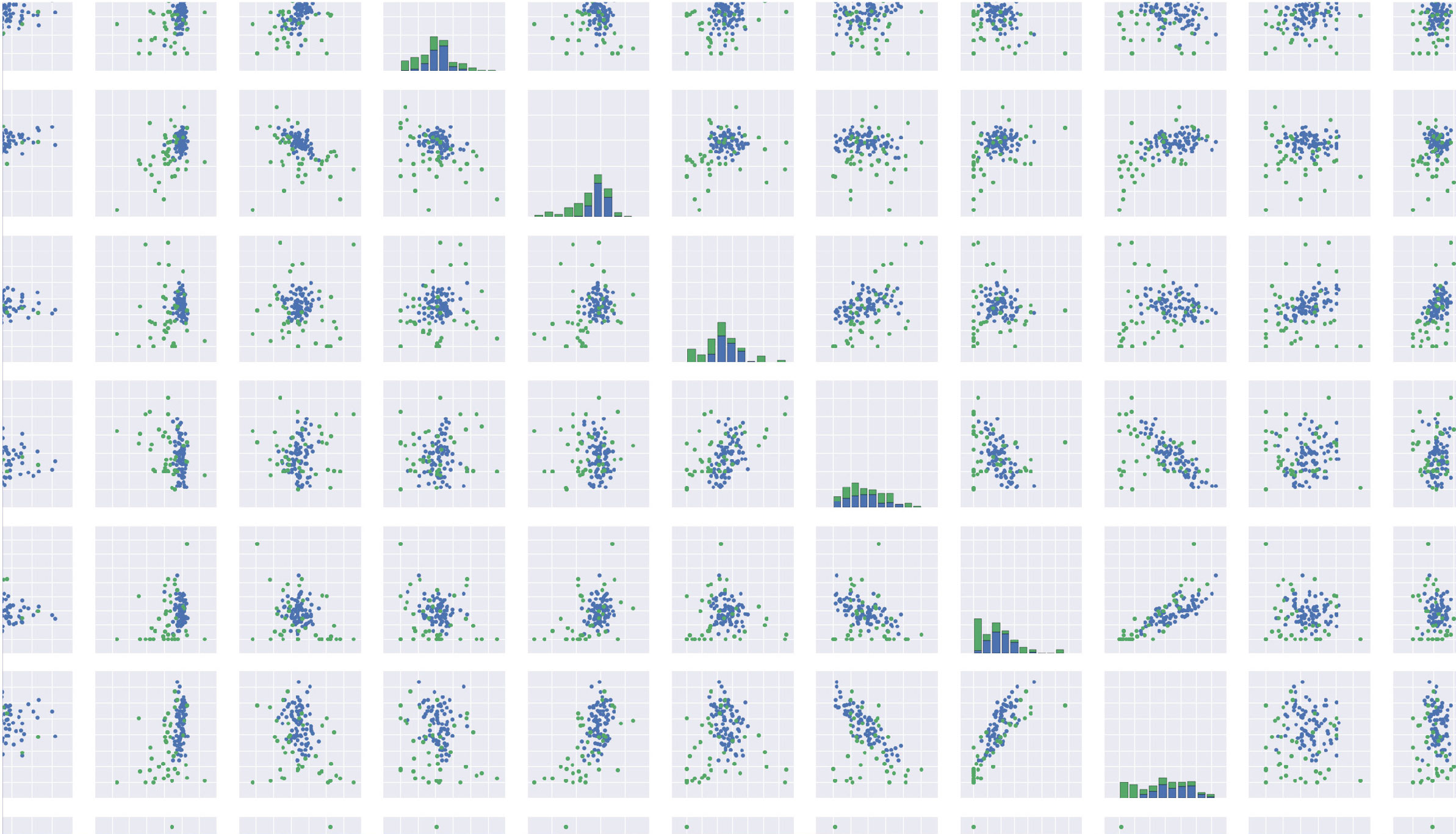

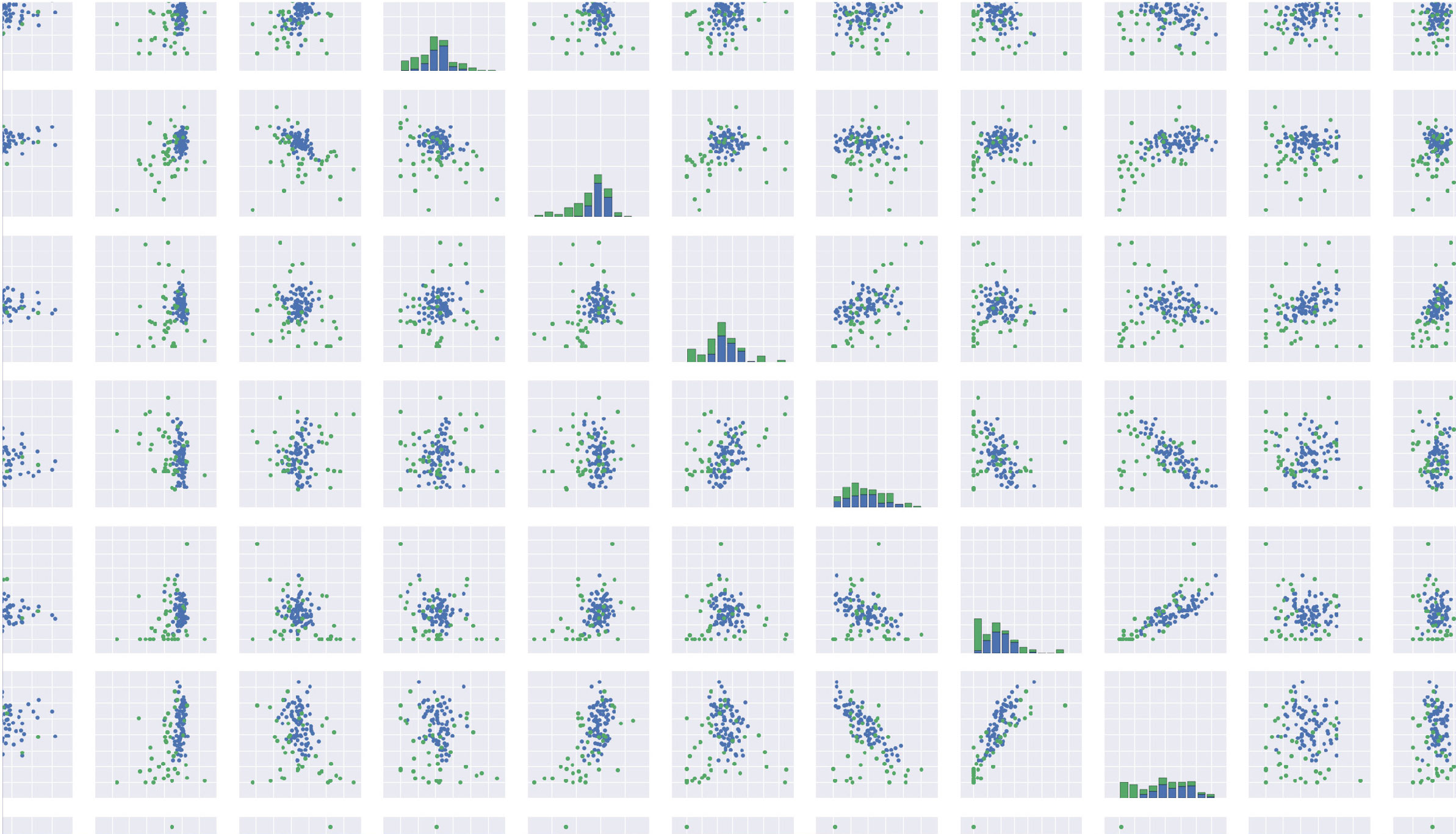

Computer users may benefit from user interfaces that can predict whether the user is struggling with a task based on an analysis of the user's eye-movement behaviors. This project is investigating how to conduct precise experiments for measuring eye movements and user task performance. Relationships between these variables can then be examined using machine-learning techniques in order to produce predictive models for adaptive user interfaces.

An important branch of this research has investigated whether eye-tracking technology can be used as a complementary or alternative method of evaluation for animations of sign language, by examining the eye-movements of native signers who view these animations to detect when they may be more difficult to understand.

This project is conducted by Matt Huenerfauth and his students.

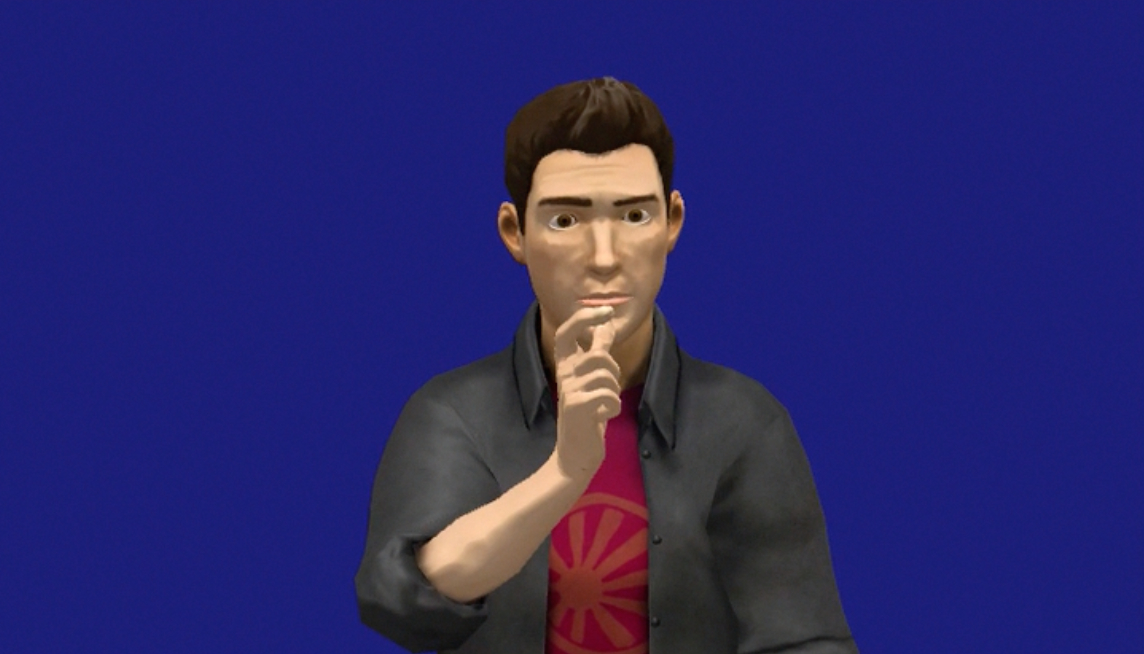

The goal of this research is to develop technologies to generate animations of a virtual human character performing American Sign Language. The funding sources have supported various animation programming platforms that underlie research systems being developed and evaluated at the laboratory.

In current work, we are investigating how to create tools that enable researchers to build dictionaries of animations of individual signs and to efficiently assemble them to produce sentences and longer passages.

This project is conducted by Matt Huenerfauth and his students.

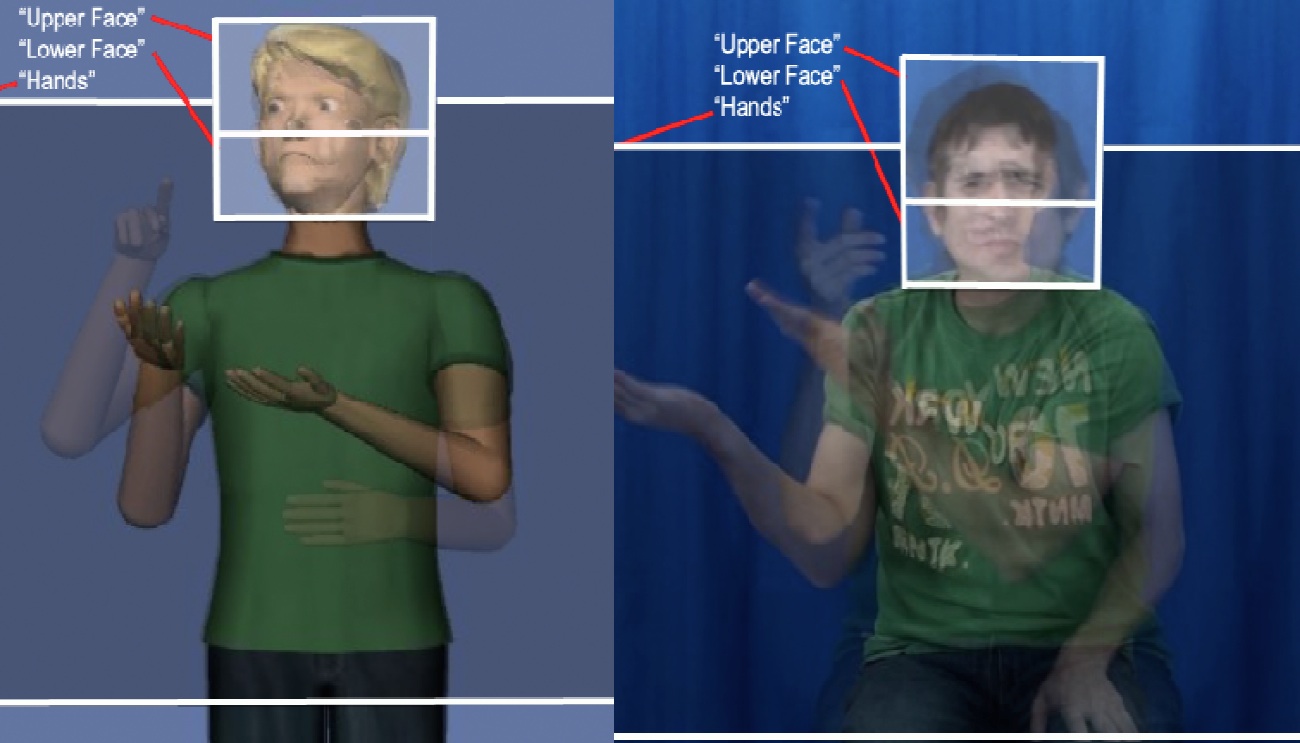

We are investigating techniques for producing linguistically accurate facial expressions for animations of American Sign Language; this would make these animations easier to understand and more effective at conveying information -- thereby improving the accessibility of online information for people who are deaf.

Matt Huenerfauth, PI. July 2011 to December 2015. “Generating Accurate Understandable Animations of American Sign Language Animation.” National Science Foundation, CISE Directorate, IIS Division. Amount: $338,005. (Collaborative research, linked to corresponding NSF research grants to Carol Neidle, P.I., Boston University, for $385,957 and to Dimitris Metaxas, P.I., Rutgers University, for $469,996.)

This research project has concluded. This project was joint work with researchers at Boston University and Rutgers University.

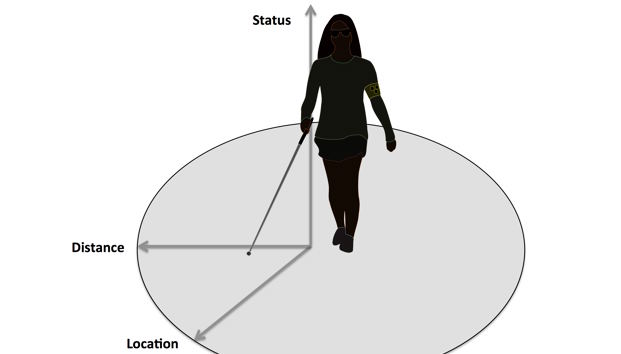

Current methods for evaluating Orientation Assistive Technology (OAT) that aids blind travelers indoors rely on performance metrics. When enhancing such systems, evaluators conduct qualitative studies to learn where to focus their efforts.

This research project has concluded. This project was conducted by Stephanie Ludi and her students.

This project has investigated the use of computational linguistic technologies to identify whether textual information would meet the special needs of users with specific literacy impairments.

In research conducted prior to 2012, we investigated text-analysis tools for adults with intellectual disabilities. A state-of-the-art predictive model of readability was developed that was based on discourse, syntactic, semantic, and other linguistic features.

In current work, we are investigating technologies for a wider variety of users.

This research project has concluded. This project was conducted by Matt Huenerfauth and his students.

This project investigates the usability and utility of resources available to speech language therapists. By understanding the usability of existing resources, we design tools that give insight into the varied language characteristics of diverse individuals with non-fluent aphasia.

This research project has concluded. This project was conducted by Vicki Hanson and her students.

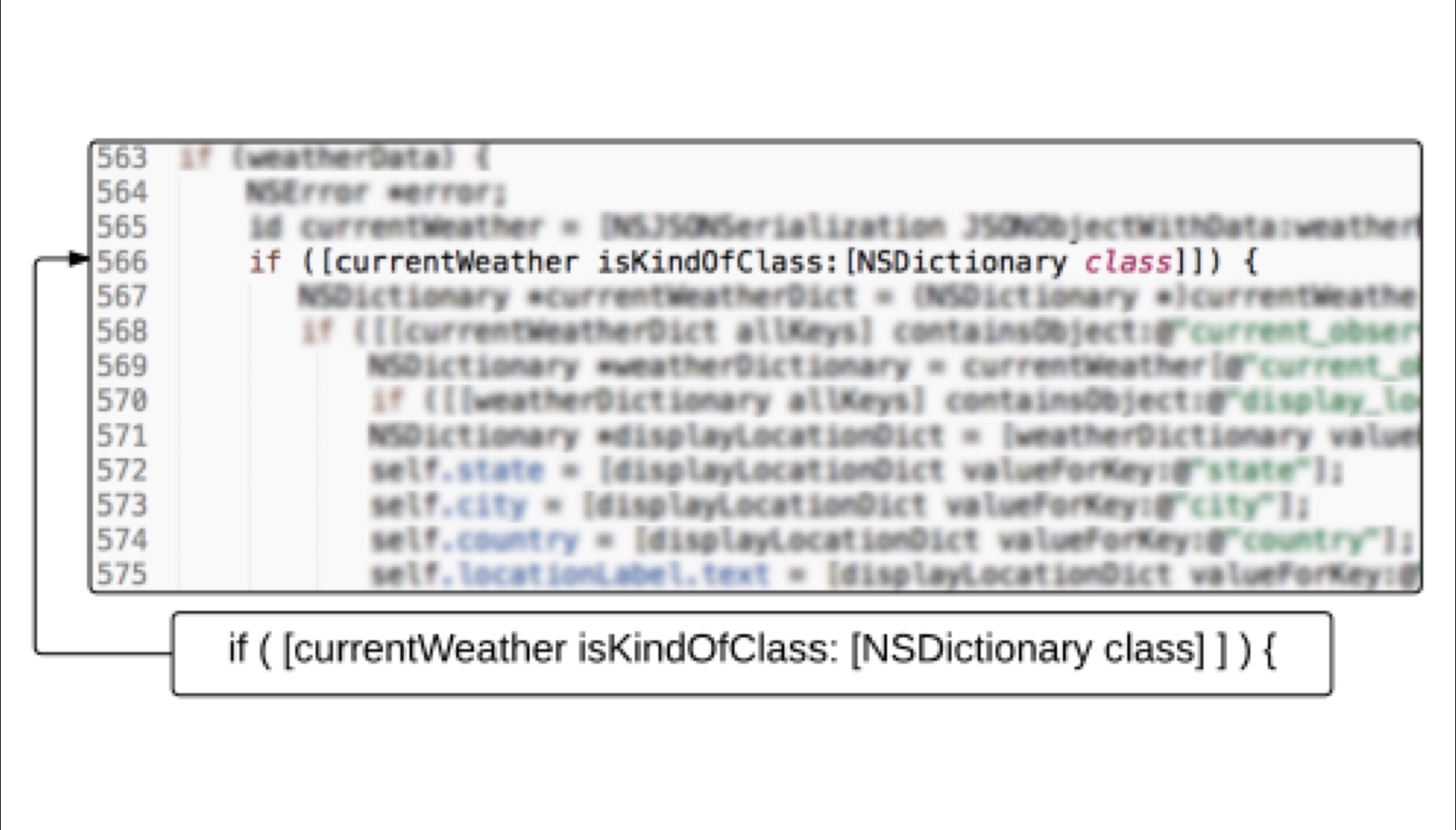

This project investigates the difficulties that blind computer programmers face when navigating through software code. By investigating the tools these programmers currently use when moving through code, and by studying the work-arounds that many of them use to make technologies work for them, we look for ways to improve this experience with new technologies.